1 Overview of the HBM Industry

1.1 Product Description

HBM stands for High Bandwidth Memory. It is an array of DDR DRAM dies that achieves high speed, large capacity, low latency, and low power consumption by stacking the dies vertically and packaging them together with the GPU.

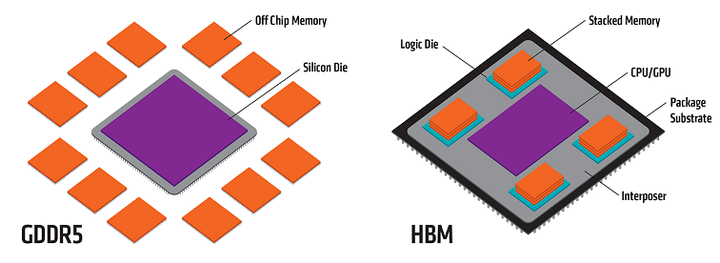

Exhibit 1: Comparison of HBM and GDDR5 Products

High Bandwidth Memory (HBM) is a type of Dynamic Random Access Memory (DRAM), which is in turn a type of Random Access Memory (RAM), the main memory a computer uses to temporarily store running programs and data. HBM stands out for its stacked architecture, which allows it to deliver higher bandwidth and capacity in a compact form.

HBM can be organized into two stacks, each with 8 channels. Each channel can be further divided into two pseudo-channels, for a total of 32 pseudo-channels. This design significantly boosts data transfer rates. HBM is available in storage sizes ranging from 2GB to 128GB, with a maximum capacity of 16GB per stack, making it well suited to high-performance applications such as AI servers and GPUs.
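The channel arithmetic above can be checked with a short sketch. The function and constant names are illustrative, and reaching the 128GB upper bound with eight 16GB stacks is an assumption rather than something the text states.

```python
# Figures quoted in the paragraph above; all names here are illustrative.
CHANNELS_PER_STACK = 8
PSEUDO_CHANNELS_PER_CHANNEL = 2
MAX_GB_PER_STACK = 16


def pseudo_channel_count(stacks: int) -> int:
    """Total pseudo-channels across the given number of HBM stacks."""
    return stacks * CHANNELS_PER_STACK * PSEUDO_CHANNELS_PER_CHANNEL


def max_capacity_gb(stacks: int) -> int:
    """Upper-bound capacity in GB if every stack holds the 16GB maximum."""
    return stacks * MAX_GB_PER_STACK


print(pseudo_channel_count(2))  # two stacks -> 32 pseudo-channels
print(max_capacity_gb(8))       # assumed eight-stack configuration -> 128 GB
```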

As technology continues to advance, HBM is expected to play an increasingly important role in various high-performance applications, driven by its ability to deliver high bandwidth and large capacity in a compact form factor. This makes it a preferred choice for cutting-edge systems that require exceptional memory performance.

Exhibit 2: HBM Product Structure

The characteristics of HBM:

- HBM stacks do not connect to the GPU/CPU/SoC through external interconnects. Instead, they connect compactly and quickly to the processor die through an intermediate layer (the interposer). Compared to the traditional von Neumann computing architecture, HBM provides extremely high memory bandwidth by stacking multiple layers of DDR, offering a substantial amount of parallel processing capability. This design brings data and parameters closer to the core computing unit, effectively reducing data transfer latency and power consumption.

- In chip design, the compact connection through the intermediate layer saves board space and enables CPU/GPU products to be smaller and lighter.

- Because HBM requires stacking a large number of DRAM dies, its manufacturing demands more advanced process and packaging technology, which raises costs. Efficient heat dissipation also remains a challenge in its design and production.

Classification of HBM:

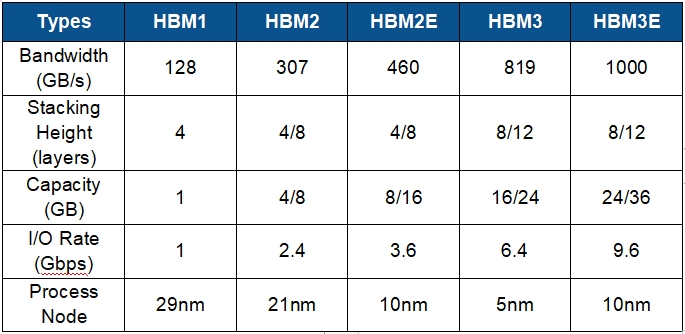

To date, HBM products have been developed in the following sequence: HBM (first generation), HBM2 (second generation), HBM2E (third generation), HBM3 (fourth generation), and HBM3E (fifth generation), with the latest HBM3E being an extended version of HBM3. GPUs now typically carry 2, 4, 6, or 8 stacks, with each stack containing up to 12 vertically stacked layers.

Exhibit 3: Comparison of HBM Product Series Parameters

In terms of market size, the HBM market is expected to nearly quadruple to $16.9 billion in 2024. According to TrendForce, HBM accounted for about 8.4% of the overall DRAM industry in 2023, with a market size of around $4.356 billion. By the end of 2024, this figure is projected to reach $16.914 billion, accounting for about 20.1% of overall DRAM output value. Goldman Sachs also projects that the HBM market will grow at a compound annual growth rate (CAGR) of nearly 100% from 2023 to 2026, reaching $30 billion by 2026.
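As a rough consistency check on these figures, the sketch below derives the 2023 to 2024 growth multiple, the CAGR implied by the $30 billion endpoint, and the total DRAM market implied by the quoted HBM shares. The variable names are illustrative, and the inputs are only the numbers quoted above. It gives a growth multiple of about 3.9x and an implied CAGR of roughly 90%.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


# Market sizes in billions of USD, as quoted in the text.
hbm_2023 = 4.356
hbm_2024 = 16.914
hbm_2026 = 30.0

growth_multiple = hbm_2024 / hbm_2023             # 2023 -> 2024 multiple
implied_cagr = cagr(hbm_2023, hbm_2026, years=3)  # 2023 -> 2026

# Total DRAM market implied by the quoted HBM shares (8.4% and 20.1%).
dram_2023 = hbm_2023 / 0.084
dram_2024 = hbm_2024 / 0.201

print(f"2023->2024 growth multiple: {growth_multiple:.2f}x")
print(f"Implied 2023->2026 CAGR:    {implied_cagr:.1%}")
print(f"Implied DRAM market 2023:   ${dram_2023:.1f}B")
print(f"Implied DRAM market 2024:   ${dram_2024:.1f}B")
```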

The unit price of HBM is several times higher than that of traditional DRAM, about five times the price of DDR5. For example, the NVIDIA H100 is equipped with five HBM2E modules, each with a capacity of 16GB, stacked in 8 layers with 2GB per layer. The total HBM2E capacity required for each H100 is 80GB, at a unit price of $10-20 per GB. The NVIDIA H100 costs nearly $3,000 to produce, with HBM2E alone accounting for around $1,600 at the upper end of that price range.
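The H100 memory figures can be reproduced with simple arithmetic. The sketch below uses only the numbers quoted above, and the constant names are illustrative.

```python
# Figures quoted in the text; names are illustrative.
STACKS_PER_H100 = 5
LAYERS_PER_STACK = 8
GB_PER_LAYER = 2
PRICE_PER_GB_USD = (10, 20)  # quoted low and high unit price
H100_COST_USD = 3_000        # "nearly $3,000" as quoted

gb_per_stack = LAYERS_PER_STACK * GB_PER_LAYER  # 16 GB per module
total_gb = STACKS_PER_H100 * gb_per_stack       # 80 GB per H100

low_cost, high_cost = [total_gb * price for price in PRICE_PER_GB_USD]
high_share = high_cost / H100_COST_USD          # HBM share at the high price

print(f"Capacity per H100: {total_gb} GB")
print(f"HBM cost range:    ${low_cost} to ${high_cost}")
print(f"Share of H100 cost at the high end: {high_share:.0%}")
```

The $1,600 figure in the text corresponds to the $20 per GB end of the range. At $10 per GB the memory would cost $800.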

In 2024, the average monthly HBM production capacity of SK Hynix and Samsung is 85,000 units, while Micron's capacity is 12,000 units. The HBM supply of the three major memory chip manufacturers, SK Hynix, Micron, and Samsung, is fully booked for this year, and most of next year's capacity has already been sold. According to analyst estimates, the supply gap in HBM demand for this year and next year is about 5.5% and 3.5% of production capacity, respectively.

The primary end products for HBM are AI servers, with cloud service providers being the ultimate consumers. The latest generation, HBM3E, is featured in NVIDIA's H200, announced in 2023. According to TrendForce, AI server shipments reached 860,000 units in 2022 and are expected to exceed 2 million units by 2026, with a compound annual growth rate of 29%. The growth in AI server shipments is driving a surge in HBM demand.
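To see how the shipment figures fit together, the sketch below compounds the 2022 base at the quoted 29% rate through 2026. The function and variable names are illustrative, and treating the CAGR as running over the four years from 2022 to 2026 is an assumption.

```python
def project(start: float, rate: float, years: int) -> float:
    """Compound a starting value forward at a fixed annual growth rate."""
    return start * (1 + rate) ** years


shipments_2022 = 860_000  # AI server units, as quoted
quoted_cagr = 0.29        # as quoted

# Assumption: the 29% CAGR applies to each of the four years 2022 -> 2026.
projected_2026 = project(shipments_2022, quoted_cagr, years=4)

print(f"Projected 2026 shipments: {projected_2026:,.0f} units")
print(f"Exceeds 2 million: {projected_2026 > 2_000_000}")
```

Under that assumption the projection lands at roughly 2.4 million units, consistent with the "exceed 2 million" statement.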

In the HBM industry, SK Hynix, Samsung, and Micron Technology have established themselves as the dominant manufacturers. These companies are at the forefront of technological advancement, with a strong focus on innovation and production efficiency. They are expected to begin mass production of the fifth-generation HBM3E in 2024, which will offer significant improvements in performance and energy efficiency over the previous generation.

The HBM3E technology is anticipated to deliver higher bandwidth, increased capacity, and reduced power consumption, making it ideal for applications in high-performance computing, artificial intelligence, and gaming. This new generation of HBM is also expected to support higher data transfer rates and improved thermal management, which are crucial for devices that require high-speed data processing and power efficiency.

In addition to these global leaders, Chinese companies such as YMT and CXMT are emerging as noteworthy competitors. Although they are currently in the construction phase and have not yet started mass production of HBM, their strategic investments in research and development, as well as their aggressive expansion plans, position them as potential disruptors in the market. As they continue to build their manufacturing capabilities and refine their technology, these companies could play a significant role in shaping the future of the HBM industry.