1. HBM Overview

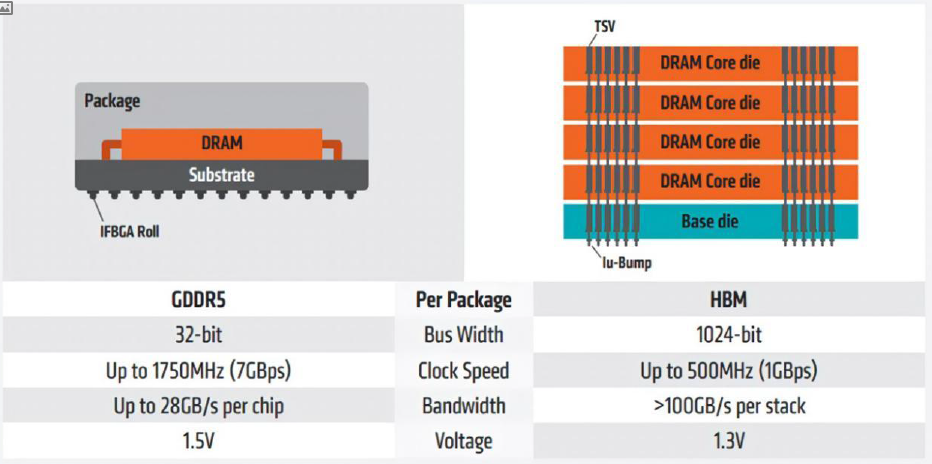

The growth of artificial intelligence has driven advances in related industries. However, GDDR5 bandwidth is insufficient for AI computing, which holds back GPU performance growth. The cache, which occupies about 60% of the chip's area, together with the memory structure and process technology, further limits GPU development. HBM has the potential to bring AI deep learning fully onto the chip, relieving this bottleneck in chip performance.

HBM (High Bandwidth Memory) is a type of DRAM that uses 3D stacking technology to stack multiple DRAM dies vertically. It offers high bandwidth and transfer rates, high capacity, low latency, and low power consumption, which has made it widely used in high-performance computing, data centers, and artificial intelligence.

Table 1: Structural differences between GDDR5 and HBM

Source: AMD

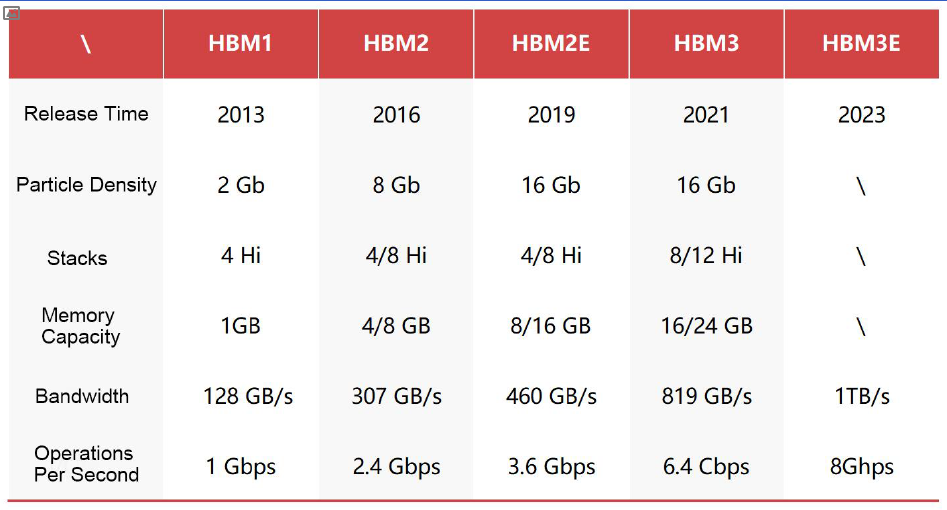

HBM first appeared in the semiconductor market in 2013 and has since evolved to its current fifth generation, HBM3E. The earlier generations are HBM, HBM2, HBM2E, and HBM3.

Table 2: HBM Technology Development

Source: Wikipedia, SK Hynix, DEPEND

In terms of DRAM usage, DDR is commonly used in servers and PCs, GDDR in graphics processing, and LPDDR in smartphones. HBM is primarily used in emerging compute-intensive fields such as supercomputers, artificial intelligence, and AI servers.

Table 3: Global DRAM Applications and Product Specifications

Source: DEPEND

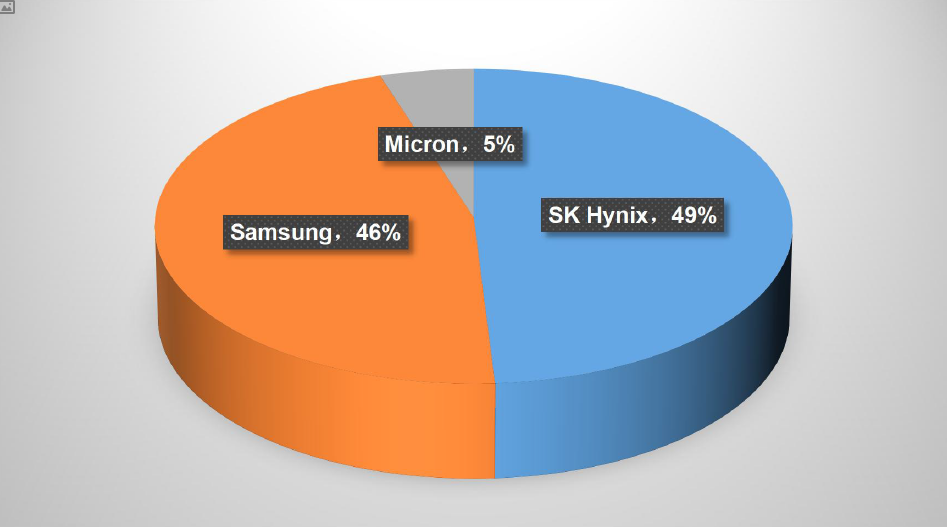

Because HBM is technically demanding, only SK Hynix, Samsung, and Micron currently supply it in stable volumes. In 2022, these three companies held an estimated 50%, 40%, and 10% of the market, respectively. SK Hynix leads this market: it launched HBM3E in August 2023 and plans to develop the next generation of high-bandwidth memory (HBM4). According to TrendForce data, Samsung and SK Hynix are projected to hold 46% and 49% of the HBM market in 2023, respectively, with Micron holding the remainder. Samsung is expected to officially supply HBM3 by the end of this year or early next year.

Chart 4: 2023 Market Share of Storage Manufacturers

Source: DEPEND
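
The text quotes the 2023 shares for Samsung and SK Hynix but leaves Micron's share implicit. As a small illustration, the arithmetic can be sketched as below. The function name `remaining_share` is hypothetical, and the sketch assumes the three suppliers together make up 100% of the HBM market.

```python
# 2023 HBM market shares quoted in the text, in percent.
quoted_2023 = {"SK Hynix": 49, "Samsung": 46}

# 2022 shares quoted in the text, in percent (used as a sanity check).
quoted_2022 = {"SK Hynix": 50, "Samsung": 40, "Micron": 10}


def remaining_share(quoted, total=100.0):
    """Return the share left over for suppliers not listed in `quoted`.

    Assumption: the listed and unlisted suppliers together sum to `total`.
    """
    used = sum(quoted.values())
    if used > total:
        raise ValueError("quoted shares exceed the total")
    return total - used


micron_2023 = remaining_share(quoted_2023)
print(f"Micron 2023 (implied): {micron_2023:.0f}%")
print(f"Unallocated in 2022: {remaining_share(quoted_2022):.0f}%")
```

Under that assumption, the implied Micron share for 2023 is about 5%, compared with the 10% quoted for 2022.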

2. HBM Market Intelligence

HBM currently accounts for approximately 1.5% of the total DRAM market. TrendForce (Jibang Consulting) predicts that HBM demand will grow 58% in 2023 and a further 30% in 2024. Omdia expects the total HBM market to reach $2.5 billion by 2025.
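
To see what the two growth forecasts imply together, the rates can be compounded. The sketch below assumes that both figures are year-over-year rates applied to the same demand measure, and it uses a hypothetical baseline of 1.0 for 2022 demand. The helper name `compound_growth` is illustrative only.

```python
def compound_growth(rates):
    """Multiply out a sequence of annual growth rates (0.58 means +58%)."""
    factor = 1.0
    for rate in rates:
        factor *= 1.0 + rate
    return factor


# Forecast growth rates quoted in the text: 58% for 2023, 30% for 2024.
forecast_rates = [0.58, 0.30]

# Demand at the end of 2024 relative to a 2022 baseline of 1.0 (assumed).
demand_index_2024 = compound_growth(forecast_rates)
print(f"2024 demand is roughly {demand_index_2024:.2f}x the 2022 level")
```

Under these assumptions, the two forecasts together imply that HBM demand roughly doubles between 2022 and 2024.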